Types of Modules

Most complex systems, from factories to rockets to software programs, are broken into modular pieces to separate concerns, reuse design patterns, and integrate pre-built components. Like these systems, decision-making AI also works best when decisions are separated into modular concerns. Modular structure makes intelligent agents easier to build, test, and maintain.

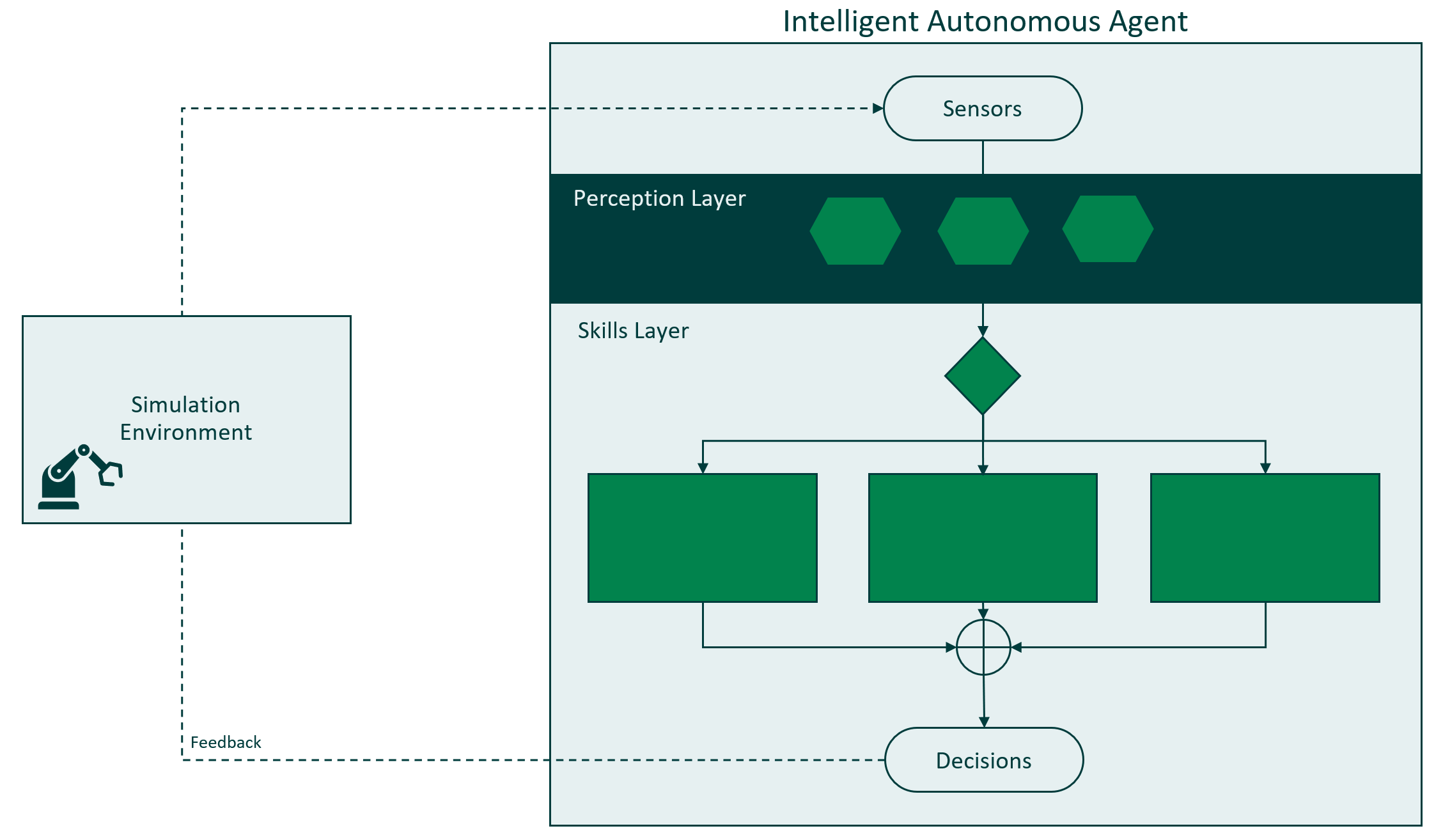

Composabl agents have three types of modules: perceptors, skills, and selectors.

Perceptors

The first layer of the agent is the perception layer. Sensors gather information, but the information often needs to be processed and translated into a format that can be used to make decisions. For example, our eyes are more than just sensors that receive light. The rods and cones in our eyes process the light and translate it into electrical signals that our brains can use to make decisions. Our ears perform a similar function after receiving a sound. Machines need more than sensors to make decisions.

Perception modules process information that comes in through the sensors and send the relevant information on to the decision-making parts of the agent. In other words, the perception layer of the agent inputs the sensor variables and outputs new variables deduced by calculation, machine learning, or other programming.

For example, if we design an agent as an autopilot for a drone, we might have sensor variables that measure pitch, yaw, and roll (the orientation of the drone), as well as velocity and acceleration in each of those three directions.

But what about stability? Stability is an important characteristic to understand while flying a drone, but there is no sensor variable that describes stability. It is too complex to be captured by a single sensor.

This is the purpose of the perception layer.

The perception layer allows us to create a variable for stability. It can be calculated using dynamics equations or estimated by a model trained with supervised machine learning. The new variable then becomes accessible to the rest of the agent along with the other sensor variables.
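As a rough illustration of a calculated perceptor, the sketch below derives a stability score from a few drone sensor variables and exposes it alongside them. Everything here is an assumption made for illustration: the `DroneSensors` fields, the `stability_perceptor` and `perceive` names, the threshold values, and the scoring heuristic itself, which is a simple stand-in and not a real dynamics equation or the Composabl SDK interface.

```python
import math
from dataclasses import dataclass


@dataclass
class DroneSensors:
    """Hypothetical sensor variables for a drone (angles in radians)."""
    pitch: float
    roll: float
    pitch_rate: float  # rad/s
    roll_rate: float   # rad/s


def stability_perceptor(s: DroneSensors,
                        max_angle: float = math.radians(30),
                        max_rate: float = math.radians(90)) -> float:
    """Return an illustrative stability score in [0, 1]; 1 means level and still.

    Assumed heuristic: the score drops as tilt or rotation rate approaches
    the (made-up) limits max_angle and max_rate.
    """
    tilt = min(math.hypot(s.pitch, s.roll) / max_angle, 1.0)
    spin = min(math.hypot(s.pitch_rate, s.roll_rate) / max_rate, 1.0)
    return 1.0 - max(tilt, spin)


def perceive(s: DroneSensors) -> dict:
    """Pass the raw sensor variables through and add the derived variable."""
    obs = dict(vars(s))
    obs["stability"] = stability_perceptor(s)
    return obs
```

The rest of the agent would then read `obs["stability"]` exactly as it reads `obs["pitch"]`. A learned perceptor could replace `stability_perceptor` without changing anything downstream.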

Here are some examples of perceptors:

- Computer Vision: A camera sensor passes image or video feeds into a perceptor module that identifies object types and locations

- Auditory Perception: A microphone sensor passes machine sounds to a perceptor module that identifies which state the machine is in based on the sounds that it is making

- Prediction: A perceptor module inputs quality measurements and past agent actions and predicts whether current actions will lead to acceptable quality measurements

- Anomaly Detection: A perceptor module inputs market variables and detects when the market is changing regimes

- Classification & Clustering: A perceptor module inputs machine and process data and classifies which of several conditions a manufacturing line is currently in

Skills Modules

Action skills decide and act. They take action to achieve goals in key scenarios where your agent needs to succeed.

The skills layer is where your agent will make decisions about how to control the system. When a specific skill is activated, it will determine the control action the system should take.

You can imagine skills being like students on a math team who are working together to complete a set of problems. Each student performs best solving a particular kind of problem: one is good at fractions and one at decimals. Depending on the type of problem, the appropriate student will use their expertise to solve the problem and produce the answer for the team.

Just as different students’ capabilities make them able to solve particular problems, different skills may make use of different technologies. Some types of decisions are best approached through skills that can be programmed with mathematical calculations, rules, or optimization algorithms. Others that are more complex and nonlinear can be trained using deep reinforcement learning.
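To make the idea of mixing technologies concrete, here is a minimal sketch of two programmed skills for a heating system that share one interface: one built from a rule and one from a calculation. The `Skill` protocol, the class names, the `temp_c` observation key, and all numeric values are hypothetical choices for this example, not part of any particular SDK. A skill trained with reinforcement learning could expose the same `act` method and wrap a trained policy instead.

```python
from typing import Protocol


class Skill(Protocol):
    """Assumed common interface: observation in, control action out."""

    def act(self, obs: dict) -> float: ...


class RuleBasedNightControl:
    """Programmed with a rule: heat only if the building gets too cold."""

    def __init__(self, min_temp_c: float = 16.0):
        self.min_temp_c = min_temp_c

    def act(self, obs: dict) -> float:
        return 1.0 if obs["temp_c"] < self.min_temp_c else 0.0


class ProportionalDayControl:
    """Programmed with a calculation: proportional control toward a setpoint."""

    def __init__(self, setpoint_c: float = 21.0, gain: float = 0.25):
        self.setpoint_c = setpoint_c
        self.gain = gain

    def act(self, obs: dict) -> float:
        error = self.setpoint_c - obs["temp_c"]
        # Clamp the heater command to the valid range [0, 1].
        return max(0.0, min(1.0, self.gain * error))
```

Because both classes satisfy the same interface, the rest of the agent does not need to know which technology sits behind a given skill.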

Skills Examples

For an HVAC system regulating temperature in an office building:

- Control the system during the day

- Control the system at night

For a factory where responses are needed to different types of alarms:

- Handle safety critical alarms (programmed with rules)

- Handle simple alarms (programmed with calculations)

- Handle complex alarms (learned with reinforcement learning)

For a drone autopilot:

- Stabilize

- Get to landing zone

- Land

- Avoid obstacles

For a robotic arm used to grab and stack objects:

- Reach (extend the robot arm from the "elbow" and "wrist")

- Move (move the arm laterally using the "shoulder")

- Orient (turn the "wrist" to position the "hand")

- Grasp (manipulate the "fingers" to clamp down)

- Stack (move laterally while grasping)

Selector Modules

Selector skills are the supervisors for your agent. In the math team analogy, the selector would be like the teacher. The teacher assesses the type of problem and assigns it to the right student.

In an intelligent agent, a selector module uses information from the sensors and perceptors to understand the scenario and then determine which skill is needed. Once the action skill is called into service, it makes the decision for the agent.

In the HVAC example, a selector skill would determine whether day or night control is needed, and then pass control to the appropriate skill. In the factory alarm example, the selector determines the type of alarm and then passes the decision to the right skill. In the drone and robotic arm examples, the skills need to be performed in sequence. In these cases, the selector determines where in the process the agent is and assigns the appropriate skill as needed.
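The two selection patterns described here, choosing by condition and choosing by stage in a sequence, can be sketched with plain functions. All names, observation keys (`hour`, `stability`, `distance_to_zone_m`), and thresholds below are assumptions made for illustration. A real selector could equally be learned instead of programmed.

```python
from typing import Callable, Dict

Observation = Dict[str, float]


# Hypothetical skills: each maps an observation to a control action label.
def day_control(obs: Observation) -> str:
    return "hold 21 C"


def night_control(obs: Observation) -> str:
    return "hold 16 C"


def hvac_selector(obs: Observation) -> Callable[[Observation], str]:
    """Choose between two skills based on the time of day (assumed 07:00-19:00)."""
    return day_control if 7 <= obs["hour"] < 19 else night_control


def drone_selector(obs: Observation) -> str:
    """Pick the skill for the current stage of a landing sequence."""
    if obs["stability"] < 0.5:           # perceptor output, assumed in [0, 1]
        return "stabilize"
    if obs["distance_to_zone_m"] > 1.0:  # still far from the landing zone
        return "get_to_landing_zone"
    return "land"


def hvac_step(obs: Observation) -> str:
    """One agent step: the selector picks a skill, then the skill decides."""
    skill = hvac_selector(obs)
    return skill(obs)
```

Note that the selector itself never produces a control action. It only hands the decision to a skill, which keeps each skill testable on its own.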